Robocentric Conversational Group Discovery

Accepted to the proceedings of RO-MAN 2020: The 29th IEEE International Conference on Robot & Human Interactive Communication

Viktor Schmuck, Tingran Sheng, Oya Celiktutan

Department of Engineering, King's College London


Abstract

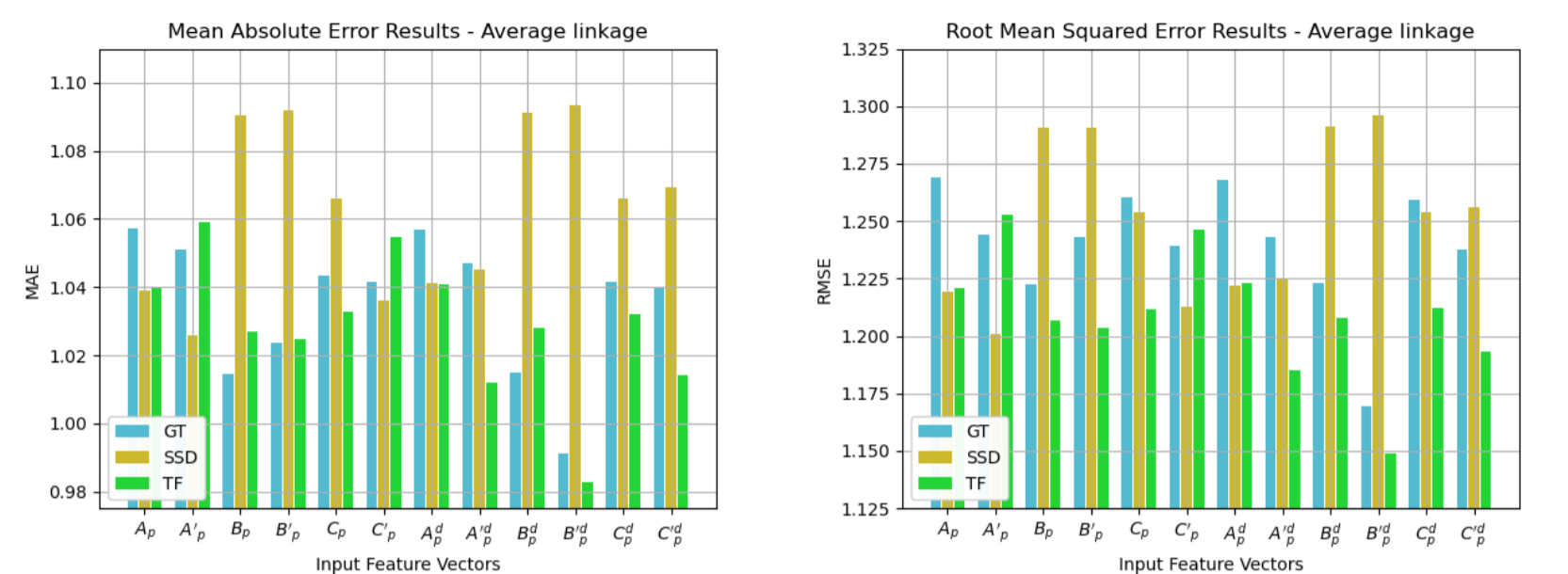

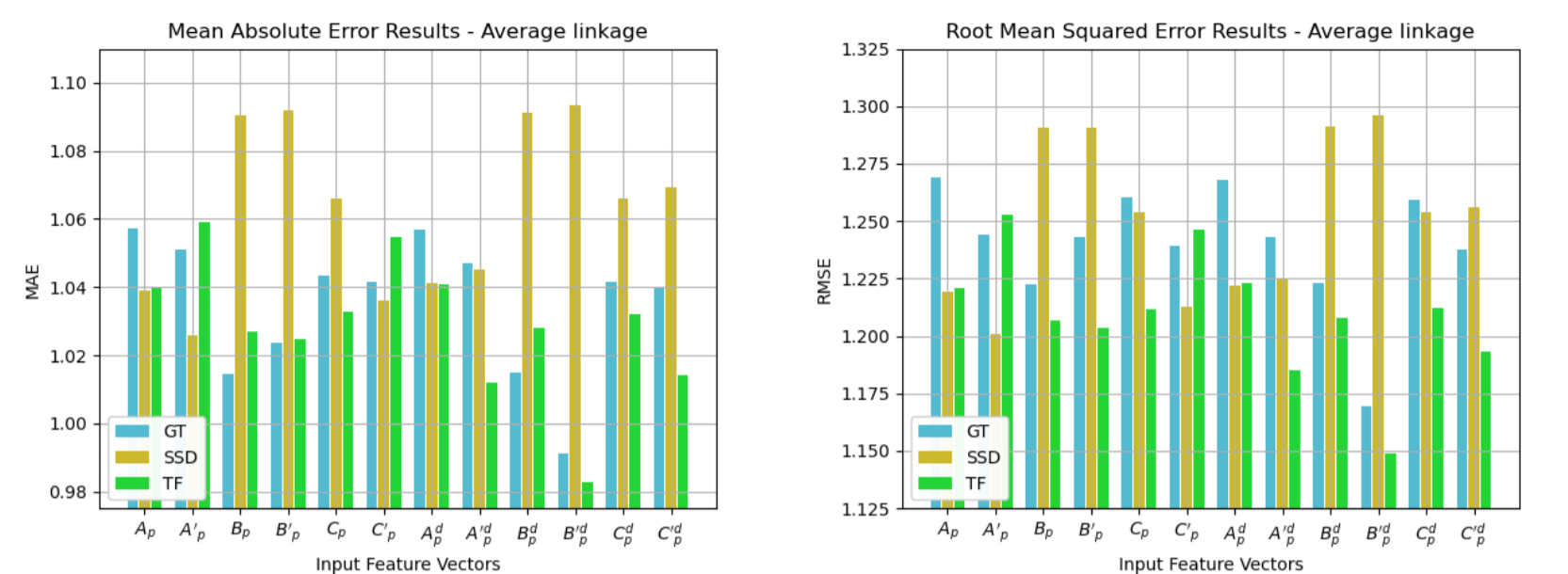

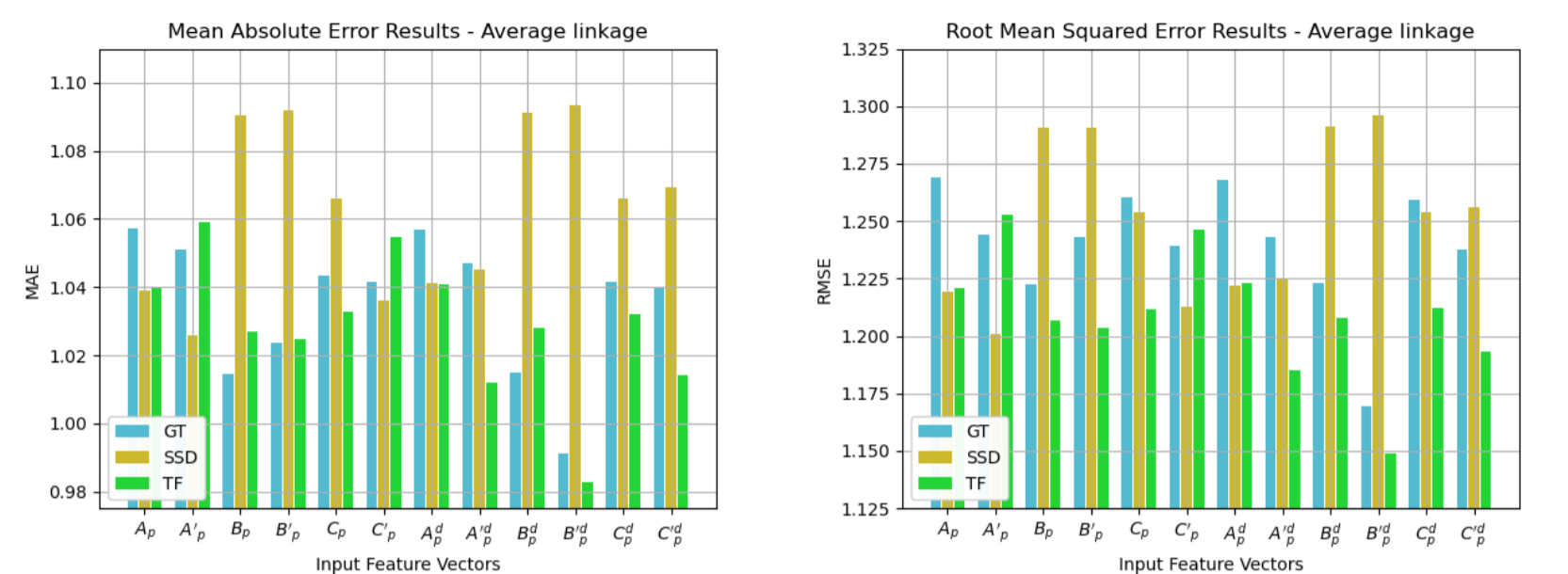

Detecting people who are interacting and conversing with each other is essential for equipping social robots with autonomous navigation and service capabilities in crowded social scenes. In this paper, we introduce a method for unsupervised conversational group detection in images captured from a mobile robot's perspective. To this end, we collected a novel dataset called Robocentric Indoor Crowd Analysis (RICA). The RICA dataset contains over 100,000 RGB, depth, and wide-angle camera images as well as LIDAR readings, recorded during a social event in which the robot navigated among the participants and captured group interactions with its on-board sensors. Using RICA, we implemented an unsupervised group detection method based on agglomerative hierarchical clustering. Our results show that incorporating the depth modality and normalising the features used in clustering improved group detection accuracy by 3% on average.
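To make the clustering idea concrete, here is a minimal sketch of agglomerative hierarchical clustering for grouping detected people. The abstract does not specify the feature set, linkage criterion, or stopping rule, so everything below is an assumption for illustration only and not the authors' implementation. Each person is described by hypothetical features (horizontal image position in pixels and depth in metres), features are min-max normalised, clusters are merged by average linkage, and merging stops at a hypothetical distance cut-off of 0.2. The input numbers are invented, not RICA data.

```python
import math
from itertools import combinations


def min_max_normalise(features):
    """Scale each feature dimension to [0, 1] across all detected people.

    Assumption: min-max scaling stands in for whatever normalisation the paper uses.
    """
    dims = len(features[0])
    lows = [min(f[d] for f in features) for d in range(dims)]
    highs = [max(f[d] for f in features) for d in range(dims)]
    return [
        tuple(
            (f[d] - lows[d]) / (highs[d] - lows[d]) if highs[d] > lows[d] else 0.0
            for d in range(dims)
        )
        for f in features
    ]


def agglomerative_groups(features, threshold):
    """Bottom-up (agglomerative) clustering with average linkage and a distance cut-off.

    Every person starts in their own cluster; the two closest clusters are merged
    repeatedly until the closest remaining pair is farther apart than `threshold`.
    Returns groups as sorted lists of person indices.
    """
    clusters = [[i] for i in range(len(features))]

    def linkage(a, b):
        # Average Euclidean distance over all cross-cluster pairs of people.
        total = sum(math.dist(features[i], features[j]) for i in a for j in b)
        return total / (len(a) * len(b))

    while len(clusters) > 1:
        # combinations yields (x, y) with x < y, so deleting index y keeps x valid.
        (ia, ib), d = min(
            (
                ((x, y), linkage(clusters[x], clusters[y]))
                for x, y in combinations(range(len(clusters)), 2)
            ),
            key=lambda item: item[1],
        )
        if d > threshold:
            break
        clusters[ia] = clusters[ia] + clusters[ib]
        del clusters[ib]
    return sorted(sorted(c) for c in clusters)


# Hypothetical detections: (horizontal pixel position, depth in metres).
# Person 4 stands in the same image column as persons 0 and 1 but much farther away.
people = [(100, 2.0), (140, 2.1), (600, 4.0), (640, 4.2), (120, 6.0)]

with_depth = agglomerative_groups(min_max_normalise(people), threshold=0.2)
image_only = agglomerative_groups(min_max_normalise([(u,) for u, _ in people]), threshold=0.2)

print(with_depth)  # [[0, 1], [2, 3], [4]]
print(image_only)  # [[0, 1, 4], [2, 3]]
```

In this toy case, the image coordinate alone merges the distant person 4 into the nearby pair, while adding normalised depth separates them. This illustrates, under the stated assumptions, why a depth cue can help; it does not reproduce the reported 3% gain.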


Paper: [PDF] | Proceedings: [link coming soon] | Data: [contact SAIR Lab]


BibTeX

@inproceedings{ViktorEtAlROMAN20,
  author    = {Viktor Schmuck and Tingran Sheng and Oya Celiktutan},
  booktitle = {The 29th IEEE International Conference on Robot \& Human Interactive Communication (RO-MAN)},
  title     = {Robocentric Conversational Group Discovery},
  year      = {2020}
}