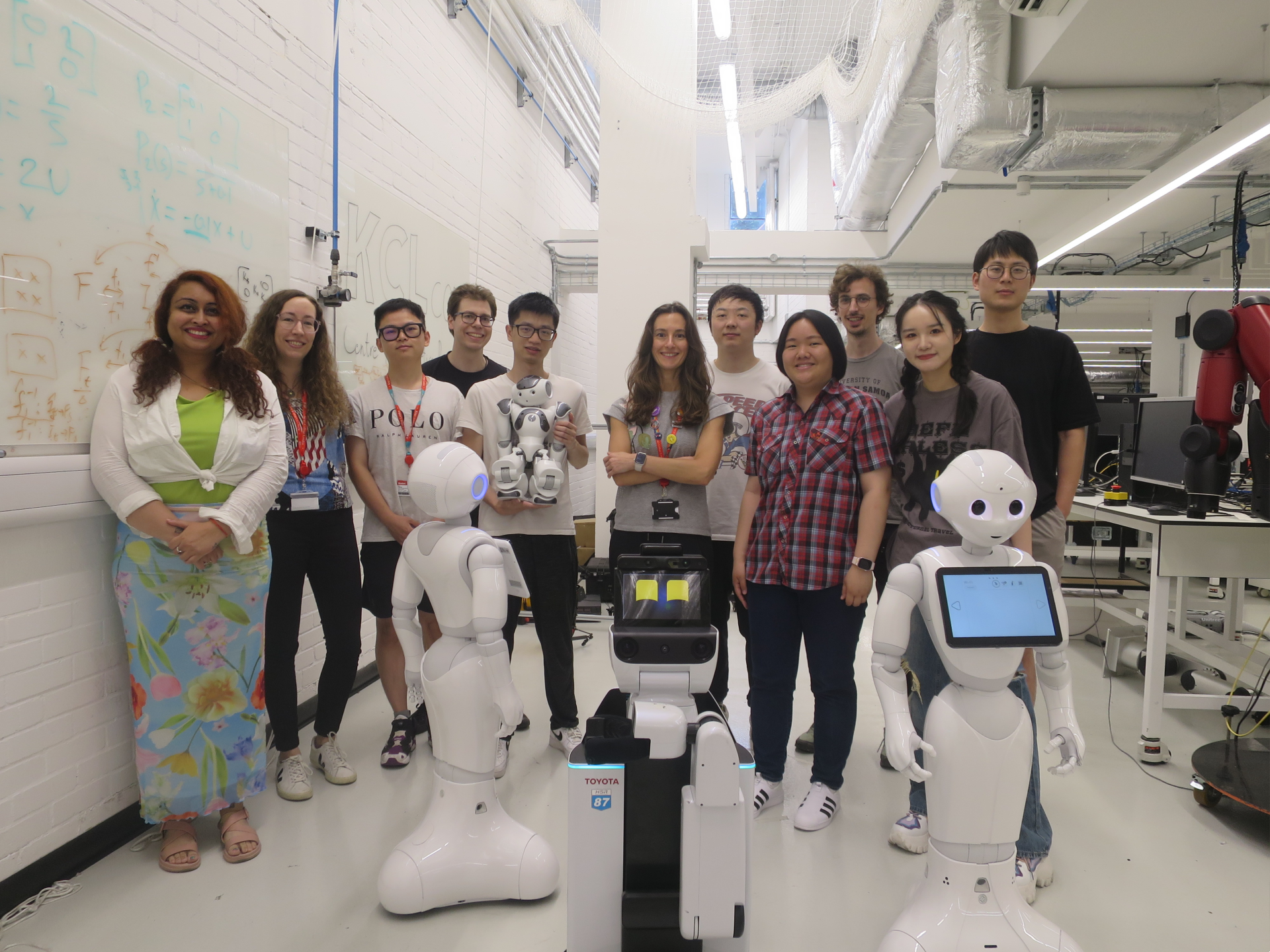

Social AI & Robotics Laboratory

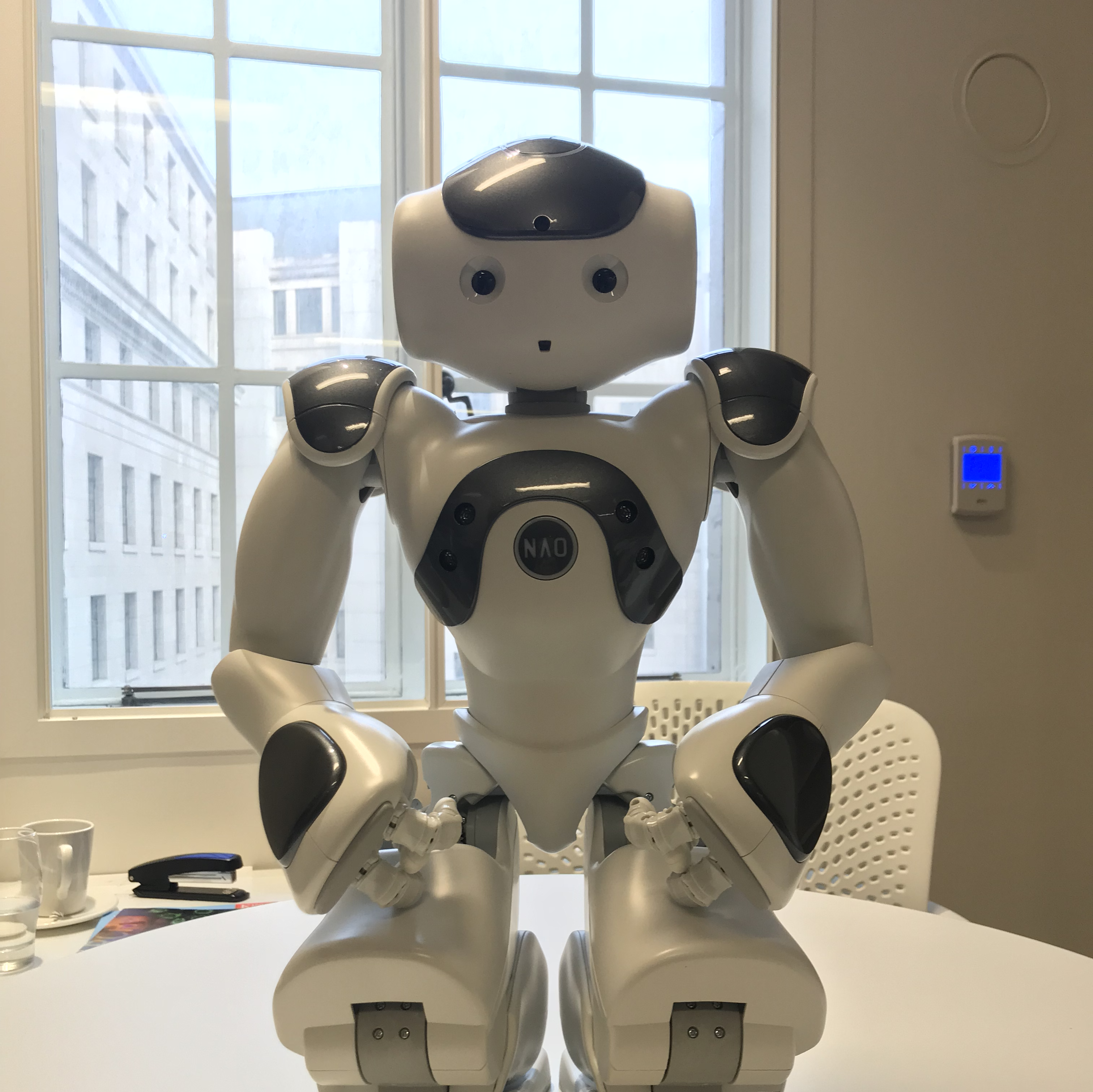

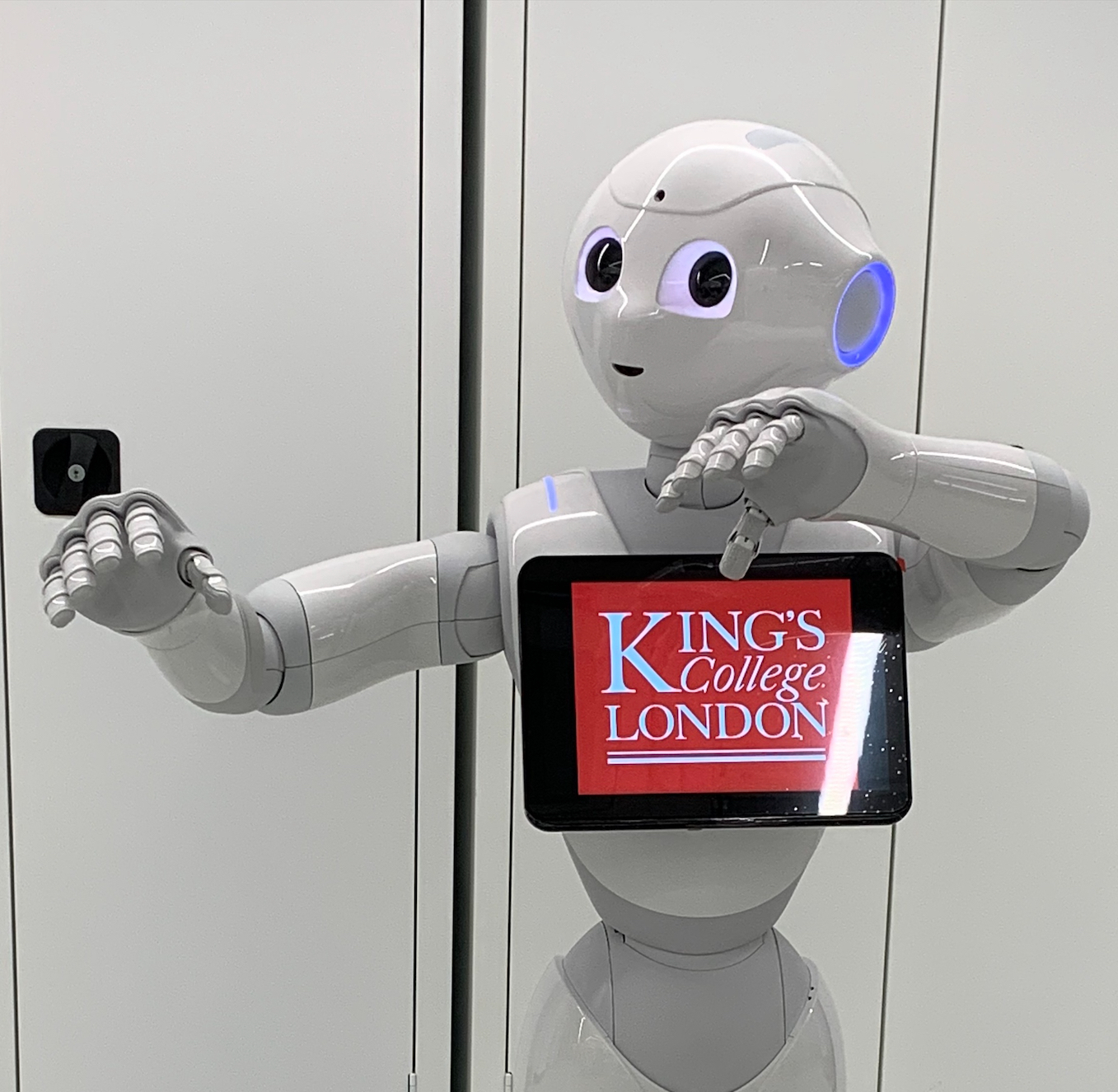

We are part of the Centre for Robotics Research (CoRe) within the Department of Engineering at King's College London. Our research focuses on machine learning for social artificial intelligence and human-robot interaction. In particular, we are interested in learning multimodal representations of human behaviour and the environment from data, and in integrating such models into the perception, learning and control of real-world systems such as robots. Key application areas include, but are not limited to, autonomous systems, intelligent interfaces and assistive technologies in healthcare, education, and public and personal spaces; indeed, any area that demands human-robot interaction.